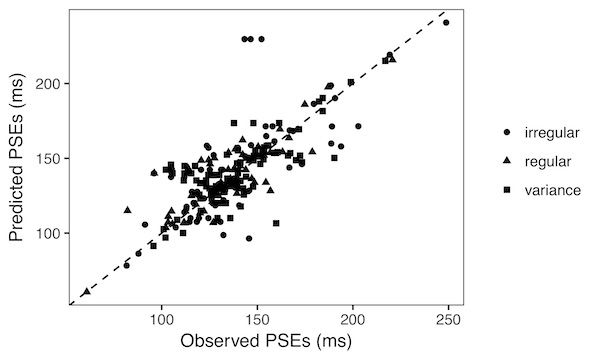

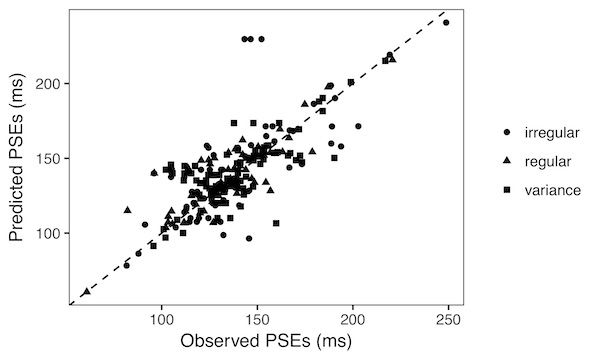

Bayesian Inference


What you see depends on what you hear: temporal averaging and crossmodal integration

In our multisensory world, we often rely more on auditory information than on visual input for temporal processing. One typical…
