Our external environment is often structured and relatively stable. Surrounding contexts can therefore be useful for guiding our attention and selection. However, changes in background context can also bias our perception. In this research topic, we aim to investigate the following aspects of contextual learning and modulation in perception:

- Contextual cueing in visual/tactile search

- Probability cueing in guided search

- Serial dependence and contextual modulation in magnitude estimation

Related Research Projects

DFG Project: Mechanisms of spatial context learning through touch and vision (together with Thomas Geyer)

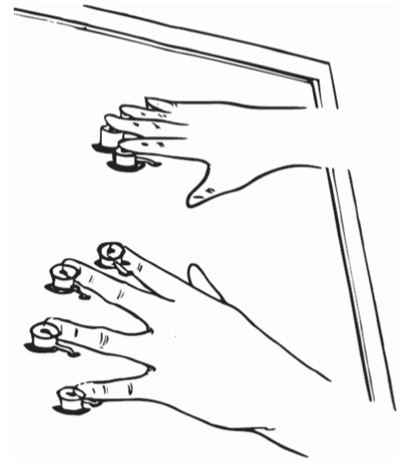

In everyday scenes, searched-for targets do not appear in isolation, but are embedded within configurations of non-target or distractor items. If the position of the target relative to the distractors is invariant, such spatial contingencies are learned and come to guide environmental scanning (the “contextual cueing” effect; Chun & Jiang, 1998; Geyer, Shi, & Müller, 2010). Importantly, such context learning is not limited to the visual modality: using a novel tactile search task, we recently showed that repeated tactile contexts aid tactile search (Assumpção, Shi, Zang, Müller, & Geyer, 2015, 2017). However, the guidance of search by context memory has been investigated almost entirely within the individual modalities of vision and touch. Thus, relatively little is known about crossmodal plasticity in spatial context learning. The present proposal will employ behavioural and neuroscience methods, in addition to mathematical modelling, to investigate context learning in unimodal search tasks (consisting of only visual or only tactile search items) and in multimodal and crossmodal search tasks (consisting of both visual and tactile search items). In doing so, any specific learning mechanisms (representations) commonly referred to as crossmodal plasticity should be revealed. The three leading research questions concern (1) the factors that may eventually give rise to crossmodal context memory (an example question is whether the development of crossmodal spatial context memory requires practice on a multimodal search task); (2) how context memory acquired in uni- or multimodal search tasks is characterized in ERP waveforms (and whether there are differences in electrophysiological components between these tasks); and (3) how the beneficial effects of context memory on reaction time performance in uni- and multimodal search can be quantified using stochastic Bayesian models.

DFG Project: The construction of attentional templates in cross-modal pop-out search (Co-PI with Thomas Töllner, Hermann Müller)

How do we select the information that is relevant to our current action goals from the plethora of information that streams into our brain continuously via our sensory organs? One prominent notion of how the selection of relevant items may be accomplished is the engagement of “attentional templates”. Following this idea, we may set up an internal mental image of the desired object in working memory (WM), which represents the target’s feature values, including, for example, its color, shape, or size. This WM representation may then be linked, via recurrent feedback connections, to early sensory analyzer units, and boost the output of those neuronal units that code the respective target features. As a result, objects containing one or several target features will have a selection advantage relative to objects that contain currently irrelevant features. The second phase of project B1 is designed to explore whether this theoretical framework is limited to visual targets or, alternatively, can also explain target selection in other sensory modalities (e.g., touch). Re-using our previously established multisensory pop-out search paradigm, we will combine behavioural (reaction times, accuracy) and electrophysiological measures (event-related lateralizations, oscillations) with computational modelling (priors). In particular, we aim to examine whether one and the same attentional template can integrate information from two different sensory modalities or, alternatively, whether search for cross-modally defined targets requires setting up and maintaining two separate, uni-sensory attentional templates. The outcome of the proposed research programme will advance our understanding of the hierarchical processing architecture that underlies cross-modal search.

Selected Publications

- Chen, S., Shi, Z., Müller, H. J., & Geyer, T. (2021). Multisensory visuo-tactile context learning enhances the guidance of unisensory visual search. Scientific Reports, 11(1), 9439. https://doi.org/10.1038/s41598-021-88946-6

- Zhang, B., Weidner, R., Allenmark, F., Bertleff, S., Fink, G. R., Shi, Z., & Müller, H. J. (2021). Statistical learning of frequent distractor locations in visual search involves regional signal suppression in early visual cortex. In bioRxiv (p. 2021.04.16.440127). https://doi.org/10.1101/2021.04.16.440127

- Allenmark, F., Shi, Z., Pistorius, R. L., Theisinger, L. A., Koutsouleris, N., Falkai, P., Müller, H. J., & Falter-Wagner, C. M. (2020). Acquisition and Use of “Priors” in Autism: Typical in Deciding Where to Look, Atypical in Deciding What Is There. Journal of Autism and Developmental Disorders. https://doi.org/10.1007/s10803-020-04828-2

- Chen, S., Shi, Z., Müller, H. J., & Geyer, T. (2021). When visual distractors predict tactile search: The temporal profile of cross-modal spatial learning. Journal of Experimental Psychology. Learning, Memory, and Cognition. https://doi.org/10.1037/xlm0000993

- Chen, S., Shi, Z., Zang, X., Zhu, X., Assumpção, L., Müller, H. J., & Geyer, T. (2019). Crossmodal learning of target-context associations: When would tactile context predict visual search? Attention, Perception & Psychophysics. https://doi.org/10.3758/s13414-019-01907-0

- Allenmark, F., Zhang, B., Liesefeld, H. R., Shi, Z., & Müller, H. J. (2019). Probability cueing of singleton-distractor regions in visual search: the locus of spatial distractor suppression is determined by colour swapping. Visual Cognition, 1–19. https://doi.org/10.1080/13506285.2019.1666953

- Shi, Z., Allenmark, F., Zhu, X., Elliott, M. A., & Müller, H. J. (2019). To quit or not to quit in dynamic search. Attention, Perception & Psychophysics. https://doi.org/10.3758/s13414-019-01857-7

- Zhang, B., Allenmark, F., Liesefeld, H. R., Shi, Z., & Müller, H. J. (2019). Probability cueing of singleton-distractor locations in visual search: Priority-map- versus dimension-based inhibition? Journal of Experimental Psychology. Human Perception and Performance, 45(9), 1146–1163. https://doi.org/10.1037/xhp0000652

- Assumpção, L., Shi, Z., Zang, X., Müller, H. J., & Geyer, T. (2018). Contextual cueing of tactile search is supported by an anatomical reference frame. Journal of Experimental Psychology. Human Perception and Performance, 44(4), 566–577. https://doi.org/10.1037/xhp0000478

- Zang, X., Shi, Z., Müller, H. J., & Conci, M. (2017). Contextual cueing in 3D visual search depends on representations in planar-, not depth-defined space. Journal of Vision, 17(5), 17. http://doi.org/10.1167/17.5.17

- Shi, Z., Zang, X., & Geyer, T. (2017). What fixations reveal about oculomotor scanning behavior in visual search. Behavioral and Brain Sciences, 40, e155. http://doi.org/10.1017/S0140525X1600025X

- Zang, X., Geyer, T., Assumpção, L., Müller, H. J., & Shi, Z. (2016). From Foreground to Background: How Task-Neutral Context Influences Contextual Cueing of Visual Search. Frontiers in Psychology, 7(June), 1–14. https://doi.org/10.3389/fpsyg.2016.00852

- Assumpção, L., Shi, Z., Zang, X., Müller, H. J., & Geyer, T. (2015). Contextual cueing: implicit memory of tactile context facilitates tactile search. Attention, Perception, & Psychophysics. doi:10.3758/s13414-015-0848-y

- Zang, X., Jia, L., Müller, H. J., & Shi, Z. (2014). Invariant spatial context is learned but not retrieved in gaze-contingent tunnel-view search. Journal of Experimental Psychology. Learning, Memory, and Cognition. http://www.ncbi.nlm.nih.gov/pubmed/25329087